8. Open Peer Review, Metrics, and Evaluation

What is it?

To be a researcher is to find oneself under constant evaluation. Academia is a "prestige economy", where an academic's worth is based on evaluations of the esteem in which they and their contributions are held by their peers, decision-makers and others (Blackmore and Kandiko, 2011). It is therefore worth distinguishing in this section between evaluation of a piece of work and evaluation of the researcher themselves. Both research and researcher are evaluated through two primary methods: peer review and metrics, the former qualitative and the latter quantitative.

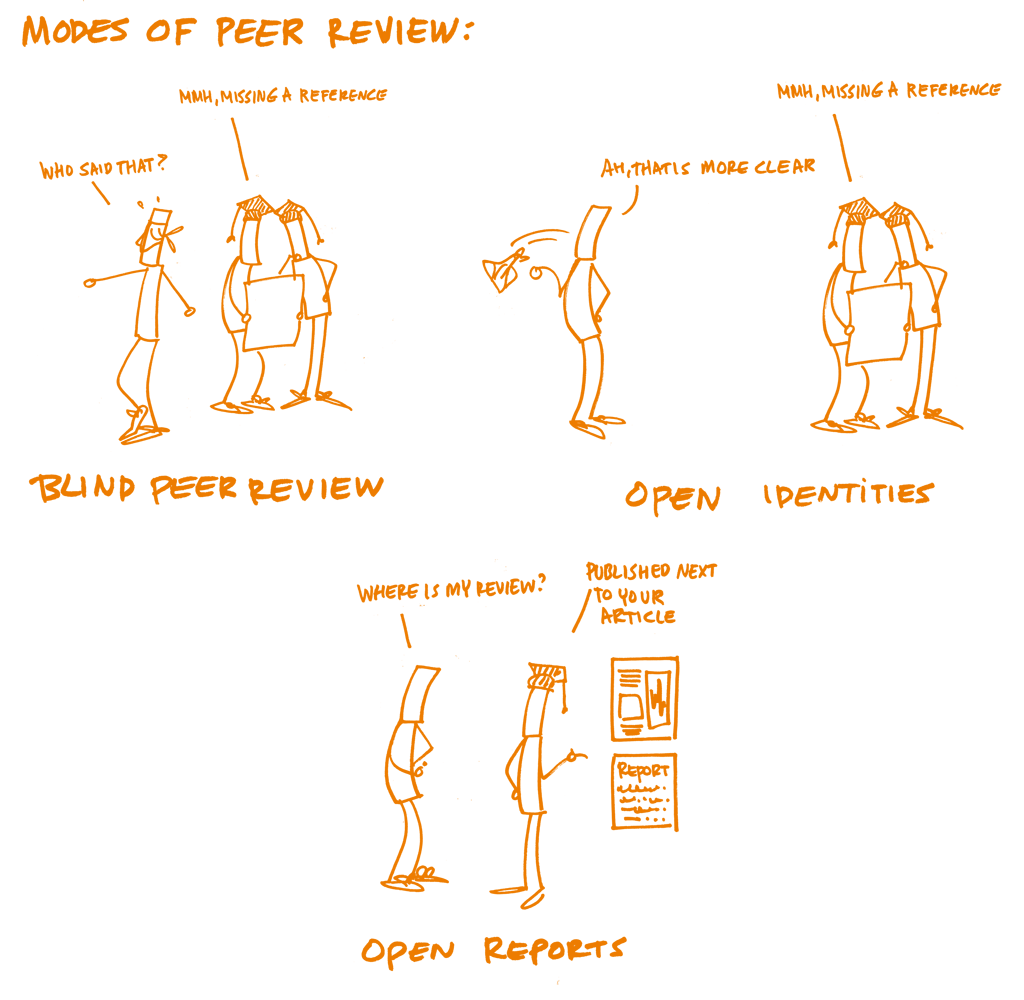

Peer review is used primarily to judge pieces of research. It is the formal quality assurance mechanism whereby scholarly manuscripts (e.g., journal articles, books, grant applications and conference papers) are made subject to the scrutiny of others, whose feedback and judgements are then used to improve works and make final decisions regarding selection (for publication, grant allocation or speaking time). Open Peer Review means different things to different people and communities and has been defined as "an umbrella term for a number of overlapping ways that peer review models can be adapted in line with the aims of Open Science" (Ross-Hellauer, 2017). Its two main traits are “open identities”, where both authors and reviewers are aware of each other’s identities (i.e., non-blinded), and “open reports”, where review reports are published alongside the relevant article. These traits can be combined, but need not be, and may be complemented by other innovations, such as “open participation”, where members of the wider community are able to contribute to the review process, “open interaction”, where direct reciprocal discussion between author(s) and reviewers, and/or between reviewers, is allowed and encouraged, and “open pre-review manuscripts”, where manuscripts are made immediately available in advance of any formal peer review procedures (either internally as part of journal workflows or externally via preprint servers).

Once they have passed peer review, research publications are often the primary measure of a researcher's work (hence the phrase "publish or perish"). However, assessing the quality of publications is difficult and subjective. Although some general assessment exercises, like the UK's Research Excellence Framework, use peer review, general assessment is often based on metrics such as citation-based indicators (e.g., the h-index), or even the perceived prestige of the journal in which a work was published (quantified by the Journal Impact Factor). The predominance of such metrics and the way they might distort incentives have been emphasised in recent years through statements like the Leiden Manifesto and the San Francisco Declaration on Research Assessment (DORA).
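As a rough illustration of how the two indicators named above are usually defined, here is a minimal sketch in Python. It assumes the standard definitions: the h-index is the largest number h such that h publications each have at least h citations, and the two-year Journal Impact Factor is the number of citations received in a given year by items published in the two preceding years, divided by the number of citable items published in those two years. The function names and all numbers are hypothetical and chosen only for illustration; commercial providers compute these values from their own proprietary citation data.

```python
from typing import Sequence


def h_index(citations: Sequence[int]) -> int:
    """Return the largest h such that h publications have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def two_year_impact_factor(citations_received: int, citable_items: int) -> float:
    """Citations in year Y to items from years Y-1 and Y-2, per citable item from those years."""
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations_received / citable_items


# Hypothetical citation counts for two researchers (illustrative data only).
steady = [10, 8, 5, 4, 3]
one_hit = [500, 2, 1, 0, 0]

print(h_index(steady))   # 4
print(h_index(one_hit))  # 2

# Hypothetical journal: 300 citations this year to 120 items from the two prior years.
print(two_year_impact_factor(300, 120))  # 2.5
```

The second researcher has far more citations in total but a lower h-index, which hints at why a single number can distort how a body of work is perceived.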

In recent years, “Alternative Metrics” or altmetrics have become a topic in the debate about a balanced assessment of research efforts. They complement citation counting by gauging other online measures of research impact, including bookmarks, links, blog posts, tweets, likes, shares, press coverage and the like. An issue underlying all of these metrics is that they are largely produced by commercial entities (e.g., Clarivate Analytics and Elsevier) based on proprietary systems, which can limit transparency.

Rationale

Open peer review

Peer review has its roots in the 17th century, with the Royal Society of London (1662) and the Académie Royale des Sciences de Paris (1699), as the privilege of science to censor itself rather than be censored by the church. It nevertheless took many years for peer review to become properly established in science, and as a formal mechanism it is much younger than many assume. For example, the journal Nature only introduced it in 1967. Although surveys show that researchers value peer review, they also think it could work better. There are often complaints that peer review takes too long, that it is inconsistent and often fails to detect errors, and that anonymity shields biases. Open peer review (OPR) hence aims to bring greater transparency and participation to formal and informal peer review processes.

Being a peer reviewer presents researchers with opportunities for engaging with novel research, building academic networks and expertise, and refining their own writing skills. It is a crucial element of quality control for academic work. Yet, in general, researchers do not often receive formal training in how to do peer review. Even researchers who are confident with traditional peer review will find that the many forms of open peer review present new challenges and opportunities. As OPR covers such a diverse range of practices, there are many considerations for reviewers and authors to take into account.

Regarding evaluation, current rewards and metrics in science and scholarship are not (yet) in line with Open Science. The metrics used to evaluate research (e.g. Journal Impact Factor, h-index) do not measure - and therefore do not reward - open research practices. Open peer review activity is not necessarily recognized as "scholarship" in professional advancement scenarios (e.g. in many cases, grant reviewers don’t consider even the most brilliant open peer reviews to be scholarly objects unto themselves). Furthermore, many evaluation metrics - especially certain types of bibliometrics - are not as open and transparent as the community would like.

Under those circumstances, Open Science practices are at best seen as an additional burden without rewards. At worst, they are seen as actively damaging chances of future funding, promotion and tenure. A recent report from the European Commission (2017) recognizes that there are two approaches to Open Science implementation and the way rewards and evaluation can support it:

- Simply support the status quo by encouraging more openness, building related metrics and quantifying outputs;

- Experiment with alternative research practices and assessment, open data, citizen science and open education.

More and more funders and institutions are taking steps in these directions, for example by moving away from simple counts and including narratives and indications of societal impact in their assessment exercises. Funders are also accepting more types of research output (such as preprints) in applications and funding different types of research (such as replication studies).

Learning objectives

- Recognise the key elements of open peer review and their potential advantages and disadvantages

- Understand the differences between types of metrics used to assess research and researchers

- Engage with the debate over the way in which evaluation schema affect the ways in which scholarship is performed

Key components

Knowledge

Open peer review

Popular venues for OPR include journals from publishers like Copernicus, Frontiers, BioMed Central, eLife and F1000Research.

Open peer review, in its different forms, has many potential advantages for reviewers and authors:

- Open identities (non-blinded) review fosters greater accountability amongst reviewers and reduces the opportunities for bias or undisclosed conflicts of interest.

- Open review reports add another layer of quality assurance, allowing the wider community to scrutinize reviews to examine decision-making processes.

- In combination, open identities and open reports are theorized to lead to better reviews, as the thought of having their name publicly connected to a work or seeing their review published encourages reviewers to be more thorough.

- Open identities and open reports enable reviewers to gain public credit for their review work, thus incentivising this vital activity and allowing review work to be cited in other publications and in career development activities linked to promotion and tenure.

- Open participation could overcome problems associated with editorial selection of reviewers (e.g., biases, closed-networks, elitism). Especially for early career researchers who do not yet receive invitations to review, such open processes may also present a chance to build their research reputation and practice their review skills.

There are some potential pitfalls to watch out for, including:

- Open identities remove the anonymity for reviewers (single-blind) or for authors and reviewers (double-blind) that is traditionally in place to counteract social biases (although there is no strong evidence that such anonymity has been effective). It’s therefore important for reviewers to constantly question their assumptions to ensure their judgements reflect only the quality of the manuscript, and not the status, history, or affiliations of the author(s). Authors should do the same when receiving peer review comments.

- Giving and receiving criticism is often fraught with unavoidably emotional reactions - authors and reviewers may subjectively agree or disagree on how to present the results and/or what needs improvement, amendment or correction. In open identities and/or open reports, the transparency could exacerbate such difficulties. It is therefore essential that reviewers communicate their points in a clear and civil way, in order to maximise the chances that they will be received as valuable feedback by the author(s).

- Lack of anonymity for reviewers in open identities review might subvert the process by discouraging reviewers from making strong criticisms, especially against higher-status colleagues.

- Finally, given these issues, potential reviewers may be more likely to decline to review.

Open metrics

The San Francisco Declaration on Research Assessment (DORA) recommends moving away from journal-based evaluations, considering all types of output, and using various forms of metrics and narrative assessment in parallel. DORA has been signed by thousands of researchers, institutions, publishers and funders, who have thereby committed themselves to putting this into practice. The Leiden Manifesto provides guidance on how to use metrics responsibly.

Regarding altmetrics, Priem et al. (2010) argue that they have the following benefits: they accumulate more quickly than citations; they can gauge the impact of research outputs other than journal publications (e.g. datasets, code, protocols, blog posts, tweets); and they can provide diverse measures of impact for individual objects. The timeliness of altmetrics is a particular advantage for early-career researchers, whose research impact may not yet be reflected in significant numbers of citations, yet whose career progression depends upon positive evaluations. In addition, altmetrics can help with early identification of influential research and potential connections between researchers. A recent report by the EC’s Expert Group on Altmetrics (Wilsdon et al. (European Commission), 2017) identified several challenges of altmetrics: a lack of robustness and susceptibility to ‘gaming’; the fact that any measure ceases to be a good measure once it becomes a target (‘Goodhart’s Law’); the relative lack of social media uptake in some disciplines and geographical regions; and a reliance on commercial entities for the underlying data.

Skills

Example exercises

- Trainees work in groups of three. Each individually writes a review of a short academic text.

- Review a paper on a pre-print server.

- Use a free bibliometrics or altmetrics service (e.g. Impactstory, Paperbuzz, Altmetric bookmarklet, Dimensions.ai) to look up metrics for a paper, then write a short explanation of how exactly the various metrics reported by each service are calculated. This is harder than you might assume, and it highlights the difficulty of finding proper metrics documentation for even the seemingly most transparent services.

Questions, obstacles, and common misconceptions

Q: Is research evaluation fair?

A: Research evaluation is only as fair as the methods and techniques it uses. Metrics and altmetrics try to gauge research quality through quantitative measures of research output, which can be accurate but does not have to be.

Learning outcomes

- Trainees will be able to identify open peer review journals

- Trainees will be aware of a range of metrics, their advantages and disadvantages

Further reading

Directorate-General for Research and Innovation (European Commission) (2017). Evaluation of Research Careers Fully Acknowledging Open Science Practices: Rewards, Incentives and/or Recognition for Researchers Practicing Open Science. doi.org/10.2777/75255

Hicks et al. (2015) Bibliometrics: The Leiden Manifesto for research metrics. doi.org/10.1038/520429a, leidenmanifesto.org

Peer Review: The Nuts and Bolts (2012). A Guide for Early Career Researchers.

Projects and initiatives

Make Data Count. makedatacount.org

NISO Alternative Assessment Metrics (Altmetrics) Initiative. niso.org

Open Rev. openrev.org

OpenUP Hub. openuphub.eu

Peer Reviewers’ Openness Initiative. opennessinitiative.org

Peerage of Science. A free service for scientific peer review and publishing. peerageofscience.org

Responsible Metrics. responsiblemetrics.org

Snowball Metrics. Standardized research metrics - by the sector for the sector. snowballmetrics.com